Avi Chawla [@_avichawla](/creator/twitter/_avichawla) on x 42.3K followers

Created: 2025-07-27 06:30:24 UTC

[Post Link](https://x.com/_avichawla/status/1949356680871067925)

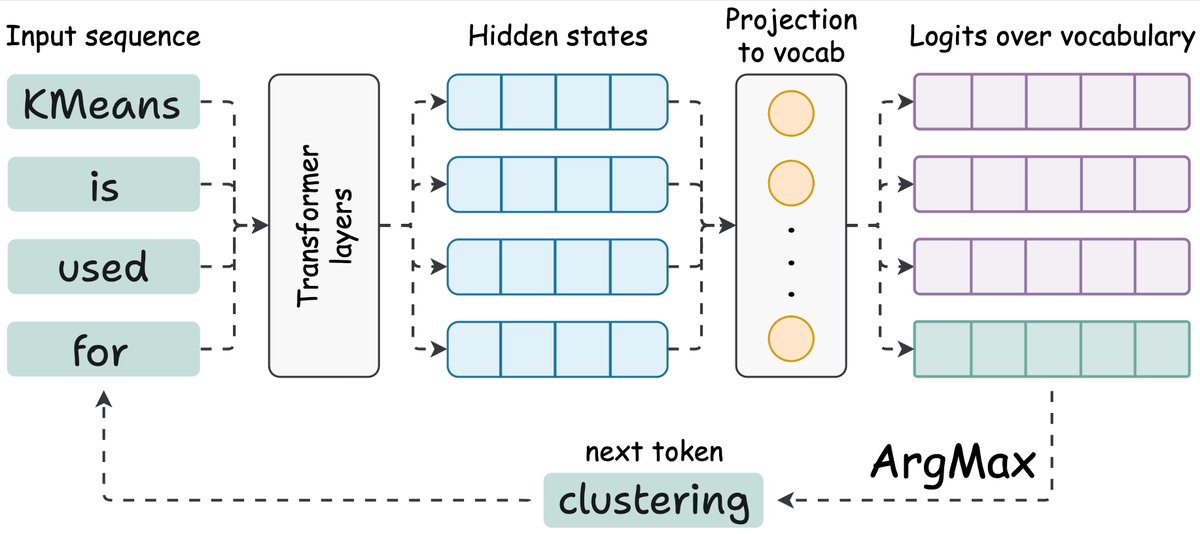

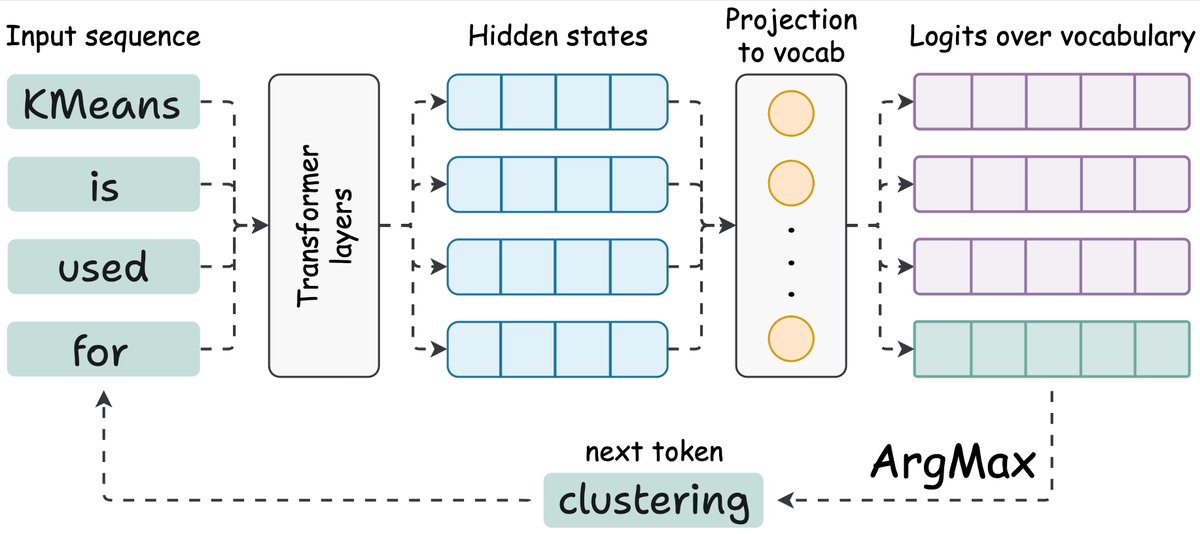

To understand KV caching, we must first know how LLMs output tokens.

- The transformer produces a hidden state for every token in the sequence.

- The hidden states are projected to the vocabulary space, giving one logit vector per token.

- Only the logits of the last token are used to generate the next token.

- The new token is appended to the sequence and the process repeats.
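The steps above can be sketched as a small decoding loop. This is a minimal illustration under loud assumptions, not a real model: `toy_transformer` is a hypothetical stand-in (a causal running mean followed by a random linear layer) for an actual transformer, the weights are random, the sizes are made up, and greedy argmax is just one possible way to pick the next token.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB_SIZE, HIDDEN_DIM = 50, 16  # hypothetical toy sizes

# Random weights standing in for a trained model (assumption).
embedding = rng.normal(size=(VOCAB_SIZE, HIDDEN_DIM))
mixing = rng.normal(size=(HIDDEN_DIM, HIDDEN_DIM))
lm_head = rng.normal(size=(HIDDEN_DIM, VOCAB_SIZE))


def toy_transformer(token_ids):
    """Stand-in for a transformer: returns one hidden state per token.

    Each position only sees itself and earlier positions (a causal
    running mean), which mimics causal attention very loosely.
    """
    x = embedding[np.asarray(token_ids)]                 # (seq_len, hidden)
    counts = np.arange(1, len(token_ids) + 1)[:, None]   # 1, 2, ..., seq_len
    causal_mean = np.cumsum(x, axis=0) / counts
    return np.tanh(causal_mean @ mixing)                 # (seq_len, hidden)


def generate(prompt_ids, num_new_tokens):
    token_ids = list(prompt_ids)
    for _ in range(num_new_tokens):
        hidden = toy_transformer(token_ids)    # hidden states for ALL tokens
        logits = hidden @ lm_head              # project to vocab: (seq_len, vocab)
        next_id = int(np.argmax(logits[-1]))   # use only the LAST token's logits
        token_ids.append(next_id)              # append and repeat
    return token_ids


output_ids = generate([3, 14, 15], num_new_tokens=5)
print(output_ids)
```

Note that every iteration recomputes the hidden states of all earlier tokens even though only the last row of `logits` is used. In this toy, as in a causal transformer, the earlier positions do not change when a token is appended, and that repeated work is what KV caching is meant to avoid.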

Check this👇
