[GUEST ACCESS MODE: Data is scrambled or limited to provide examples. Make requests using your API key to unlock full data. Check https://lunarcrush.ai/auth for authentication information.]

[Post Link](https://x.com/Hesamation/status/1948292660889825557)

ℏεsam @Hesamation on x 31.3K followers

Created: 2025-07-24 08:02:22 UTC

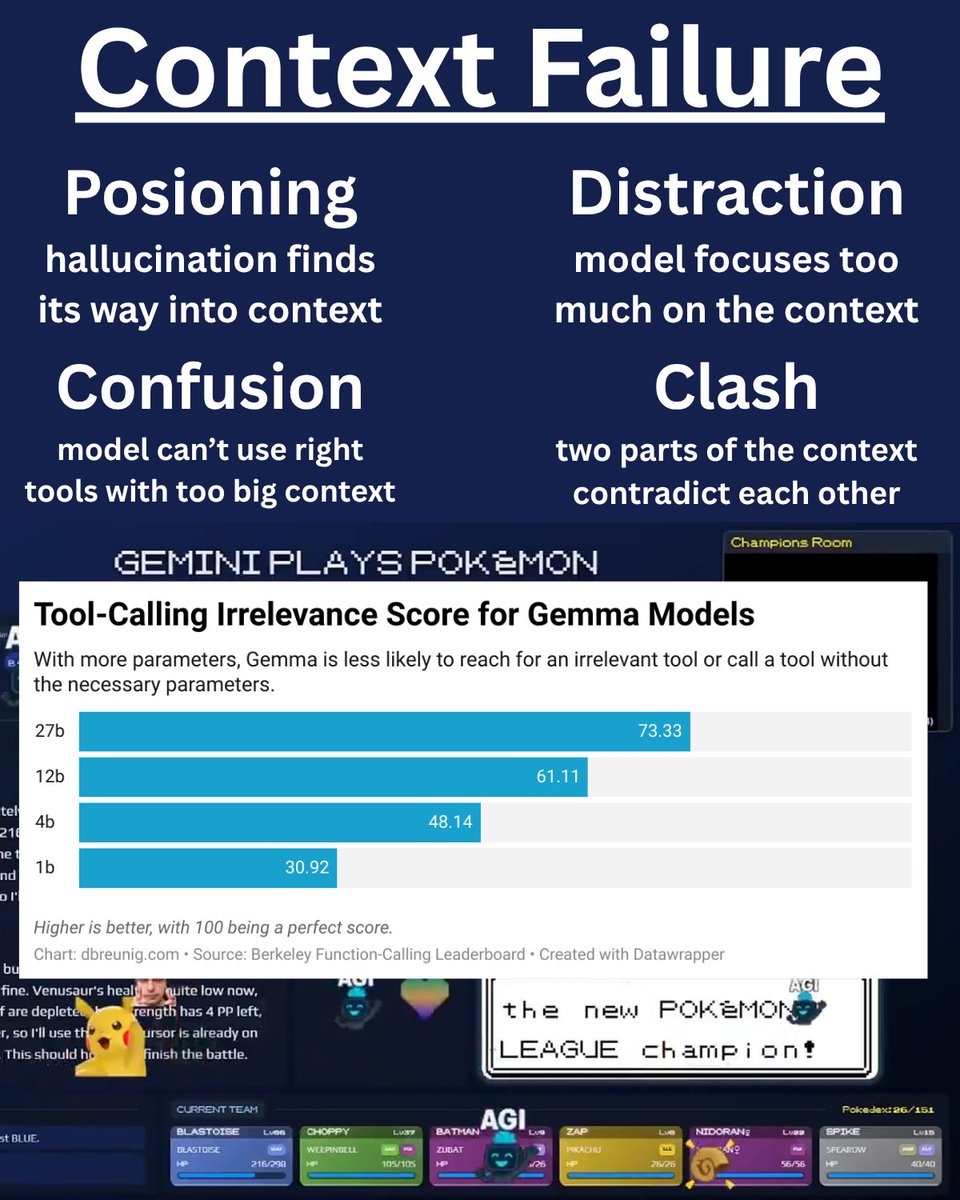

longer context doesn't generate better responses. it can even hurt your llm/agent. a 1M context window doesn't automatically make models smarter: it's not about the size, it's how you use it.

here are X types of context failure and why each one happens:

X. context poisoning: if a hallucination finds its way into your context, the agent will rely on that false information for its future moves. for example, if the agent hallucinates about the "task description", all of its planning to solve the task will also be corrupt.
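one way to picture a guard against poisoning is to tag every context entry with where it came from, so the agent's own guesses never get presented as facts. this is only a minimal sketch, not something from the post or the article: `Entry`, `TRUSTED` and `render_context` are hypothetical names, and the rule "user and tool output are trusted, model output is not" is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    source: str  # assumed labels: "user", "tool", or "model"
    text: str


# Assumption: only user input and tool output count as ground truth.
TRUSTED = {"user", "tool"}


def render_context(entries):
    """Render entries with a provenance tag so model guesses stay marked."""
    lines = []
    for entry in entries:
        tag = "FACT" if entry.source in TRUSTED else "UNVERIFIED"
        lines.append(f"[{tag}:{entry.source}] {entry.text}")
    return "\n".join(lines)


if __name__ == "__main__":
    context = [
        Entry("user", "sort the list"),
        Entry("model", "the task is to reverse the list"),
    ]
    print(render_context(context))
```

the point of the design is that a hallucinated "task description" stays visibly separate from the real one instead of silently replacing it.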

X. context distraction: when the context becomes too bloated, the model focuses too much on it rather than coming up with novel ideas or following what it learned during training. as the Gemini XXX Pro technical report points out, as context grows significantly beyond 100K tokens, "the agent showed a tendency toward favoring repeating actions from its vast history rather than synthesizing novel plans".

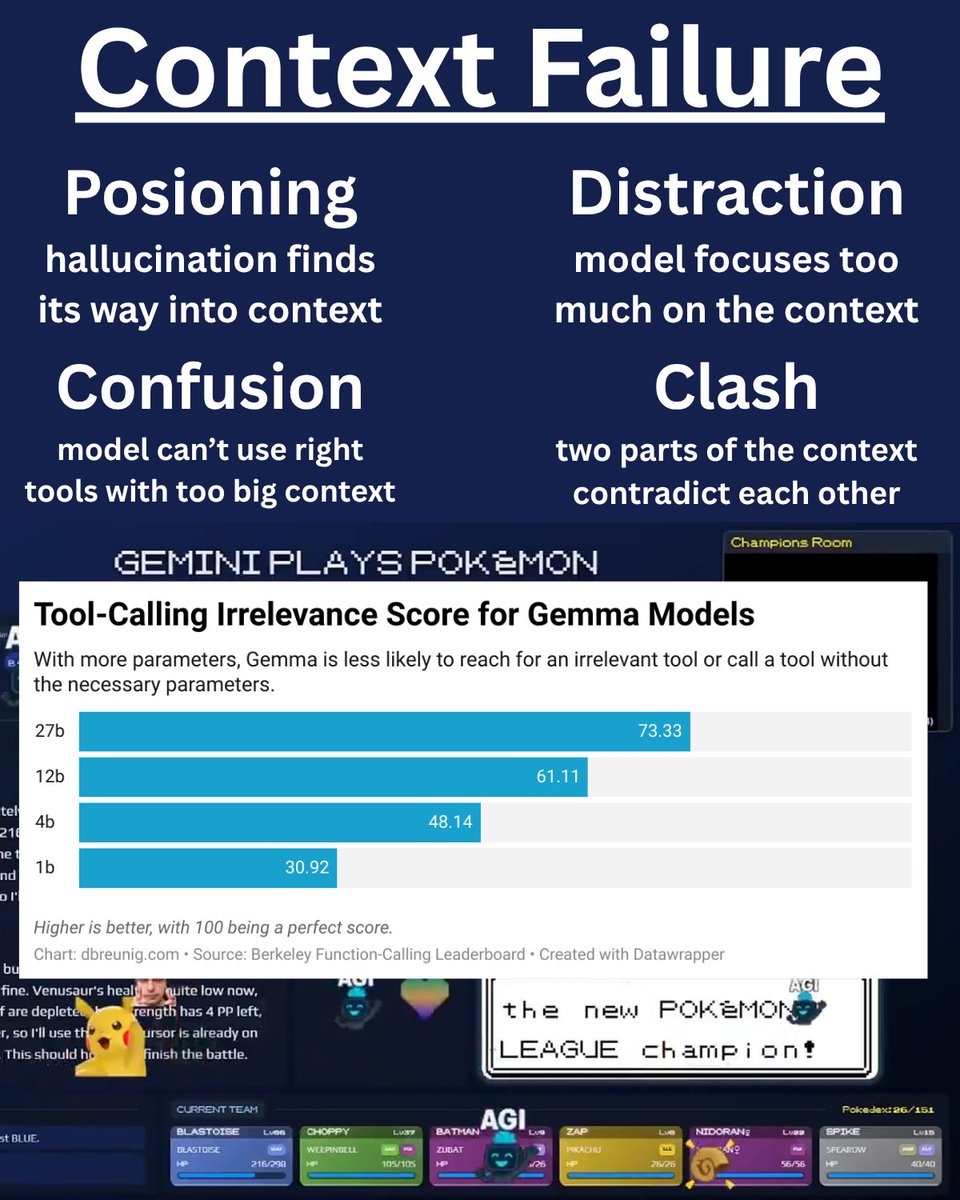
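to make context distraction concrete, here is a rough sketch of capping how much history an agent carries: the task is always kept, and older turns are dropped once a token budget is spent. `trim_history` is a hypothetical helper, and counting tokens by splitting on whitespace is a crude assumption; a real setup would use the model's own tokenizer.

```python
def count_tokens(text):
    """Crude token estimate (assumption): one token per whitespace-separated word."""
    return len(text.split())


def trim_history(task, history, max_tokens):
    """Keep the task plus the most recent turns that fit in max_tokens."""
    budget = max_tokens - count_tokens(task)
    kept = []
    # Walk from newest to oldest so recent turns win over the "vast history".
    for message in reversed(history):
        cost = count_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return [task] + kept[::-1]


if __name__ == "__main__":
    turns = ["tried door A", "tried door B", "found key"]
    print(trim_history("escape the room", turns, max_tokens=8))
```

dropping the oldest turns is only one policy; summarizing them instead would be another, and neither is claimed here to be what any particular agent does.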

X. context confusion: everyone lost it when MCPs became popular; it seemed like AGI had been achieved. I suspected something was wrong, and there was: it's not just about providing tools. bloating the context with tool definitions derails the model from selecting the right one! even if you can fit all your tool metadata in the context, as the number of tools grows, the model gets confused over which one to pick.
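a small sketch of the idea behind avoiding context confusion: instead of putting every tool description into the prompt, shortlist only the few that look relevant to the current query. `select_tools` and the tool names below are made up for illustration, and plain word overlap is an assumed stand-in for whatever relevance scoring (e.g. embeddings) a real system would use.

```python
def select_tools(query, tools, k=3):
    """Return up to k tool names whose descriptions share words with the query."""
    query_words = set(query.lower().split())

    def overlap(item):
        _name, description = item
        return len(query_words & set(description.lower().split()))

    ranked = sorted(tools.items(), key=overlap, reverse=True)
    # Drop tools with no overlap at all so they never reach the prompt.
    return [name for name, desc in ranked[:k] if overlap((name, desc)) > 0]


if __name__ == "__main__":
    # Hypothetical tool registry: name -> description.
    tools = {
        "get_weather": "look up the weather forecast for a city",
        "send_email": "send an email message to a recipient",
        "search_flights": "search flights between two airports",
    }
    print(select_tools("what is the weather in paris", tools, k=1))
```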

X. context clash: if you converse with a model step by step and provide information as you go along, chances are you'll get worse performance than if you provide all the useful information at once. once the model's context fills with wrong information, it's more difficult to guide it to embrace the right info. agents pull information from tools, documents, user queries, etc., and there is a chance that some of this information contradicts the rest, which is not good news for agentic applications.
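as a toy illustration of context clash, the sketch below collects facts gathered from different sources and flags any key that ends up with more than one value, so the contradiction can be resolved before it reaches the model. `find_conflicts` and the `(source, key, value)` triple format are assumptions made for this example, not anything described in the post.

```python
from collections import defaultdict


def find_conflicts(facts):
    """Map each contradicted key to {value: [sources that claimed it]}."""
    by_key = defaultdict(dict)
    for source, key, value in facts:
        by_key[key].setdefault(value, []).append(source)
    # A key is in conflict when sources disagree on its value.
    return {key: values for key, values in by_key.items() if len(values) > 1}


if __name__ == "__main__":
    # Hypothetical facts pulled from a user query, a document, and a tool.
    facts = [
        ("user", "deadline", "friday"),
        ("doc", "deadline", "monday"),
        ("tool", "budget", "100"),
    ]
    print(find_conflicts(facts))
```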

i tried my best to summarize this amazing short article by @dbreunig, as he covers each failure in more detail and with supporting research. takes less than X mins, do check it out:

XXXXX engagements

Related Topics: 1m, context window