[Post Link](https://x.com/AINativeF/status/1947459401897652294)

AI Native Foundation @AINativeF on x 1913 followers

Created: 2025-07-22 00:51:18 UTC

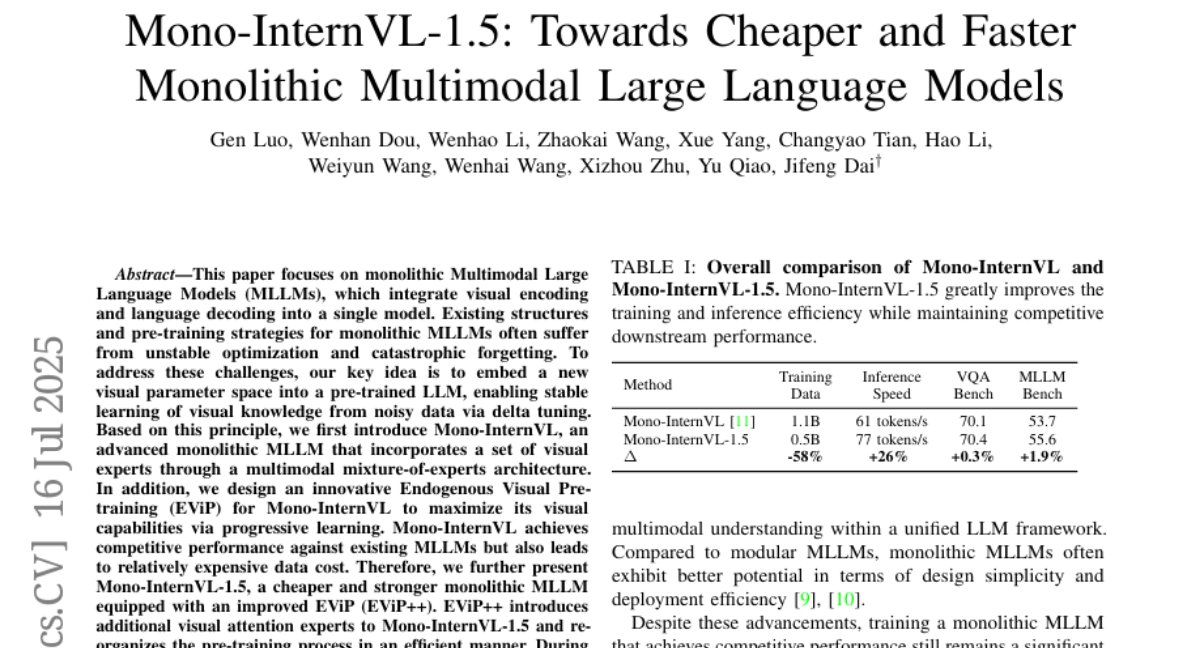

X. Mono-InternVL-1.5: Towards Cheaper and Faster Monolithic Multimodal Large Language Models

🔑 Keywords: Mono-InternVL, Multimodal Large Language Models, visual experts, delta tuning, EViP++

💡 Category: Multi-Modal Learning

🌟 Research Objective:

- To address unstable optimization and catastrophic forgetting in monolithic Multimodal Large Language Models (MLLMs) by embedding a new visual parameter space into a pre-trained LLM and learning it with Endogenous Visual Pre-training (EViP).

🛠️ Research Methods:

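- Employing a multimodal mixture-of-experts (MoE) architecture whose visual experts are trained with delta tuning, so visual knowledge is learned stably from noisy data while the pre-trained language parameters stay fixed.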

- Developing Mono-InternVL and its improved version, Mono-InternVL-1.5, which uses an upgraded, more efficient pre-training process (EViP++) and faster MoE operations.
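The post does not describe the implementation. As a rough illustration of how a modality-routed MoE layer with delta tuning can work, here is a minimal pure-Python sketch under stated assumptions: each token goes to an expert chosen by its modality tag (no learned gate), each "expert" is a single toy linear map rather than a real FFN, and only the new visual expert receives updates while the pre-trained text expert stays frozen. The names `Expert`, `ModalityMoE`, and `sgd_step` are hypothetical; this is not the authors' code.

```python
from dataclasses import dataclass
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Compute y = W x for a dense matrix stored as a list of rows."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def identity(n: int) -> Matrix:
    """Return a fresh n x n identity matrix (a new list on every call)."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


@dataclass
class Expert:
    """Hypothetical toy expert: one linear map standing in for an FFN."""
    weight: Matrix
    trainable: bool

    def __call__(self, x: Vector) -> Vector:
        return matvec(self.weight, x)


class ModalityMoE:
    """Routes each token to an expert selected by its modality tag (assumed static routing)."""

    def __init__(self, text_expert: Expert, visual_expert: Expert) -> None:
        self.experts: Dict[str, Expert] = {"text": text_expert, "image": visual_expert}

    def forward(self, tokens: List[Vector], modalities: List[str]) -> List[Vector]:
        return [self.experts[m](x) for x, m in zip(tokens, modalities)]

    def sgd_step(self, tokens: List[Vector], modalities: List[str],
                 grads_out: List[Vector], lr: float) -> None:
        """One SGD step; for y = W x the weight gradient is the outer product g x^T."""
        for x, m, g in zip(tokens, modalities, grads_out):
            expert = self.experts[m]
            if not expert.trainable:
                continue  # frozen pre-trained expert: delta tuning leaves it untouched
            for i, gi in enumerate(g):
                for j, xj in enumerate(x):
                    expert.weight[i][j] -= lr * gi * xj


# Usage: a frozen text expert and a trainable visual expert that start from the
# same weights (starting from shared weights is an assumption of this sketch).
text_ffn = Expert(identity(2), trainable=False)
visual_ffn = Expert(identity(2), trainable=True)
moe = ModalityMoE(text_ffn, visual_ffn)

tokens = [[1.0, 2.0], [3.0, 4.0]]
modalities = ["image", "text"]
outputs = moe.forward(tokens, modalities)  # identity experts return inputs unchanged
moe.sgd_step(tokens, modalities, grads_out=[[0.5, 0.0], [0.5, 0.0]], lr=0.1)
```

After the step, `text_ffn.weight` is still the identity even though the text token carried a nonzero gradient, while only the first row of `visual_ffn.weight` has moved. That separation is the intuition for why training new visual parameters, instead of the language weights, can help guard against catastrophic forgetting.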

💬 Research Conclusions:

- Mono-InternVL significantly outperforms other monolithic MLLMs across multiple benchmarks.

- Mono-InternVL-1.5 lowers training cost while keeping performance competitive, and notably reduces first-token latency.

👉 Paper link: