Mike [@MikeLongTerm](/creator/twitter/MikeLongTerm) on x 7872 followers

Created: 2025-07-19 19:47:50 UTC

[Post Link](https://x.com/MikeLongTerm/status/1946658256753250595)

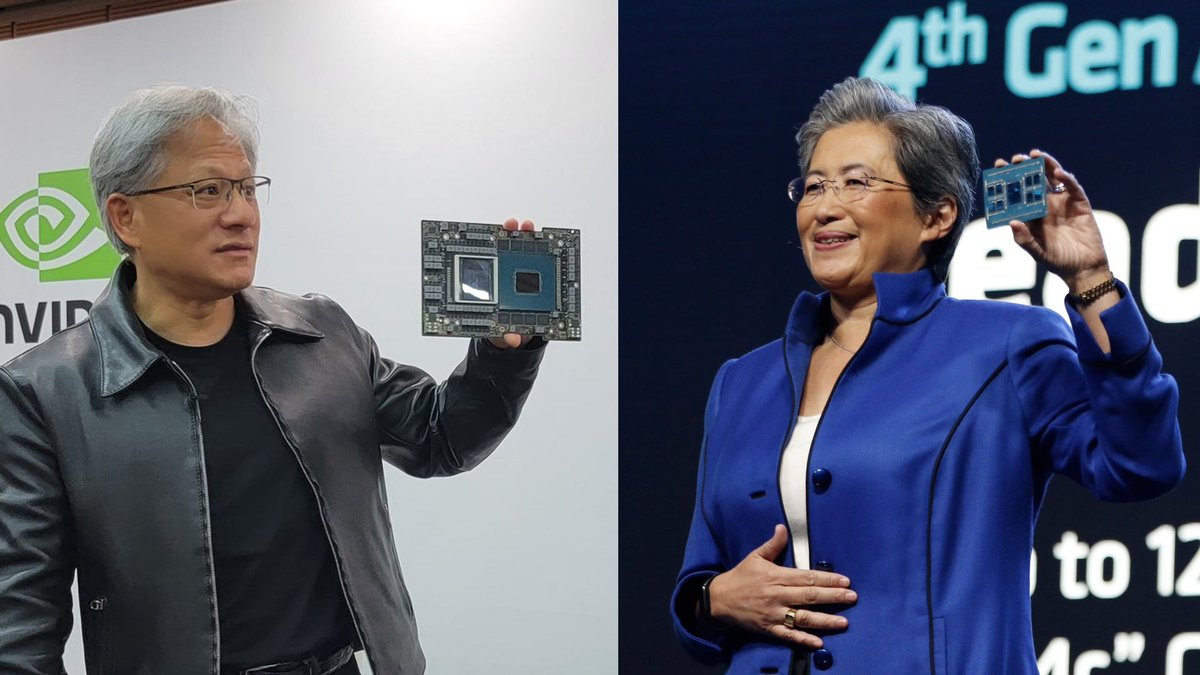

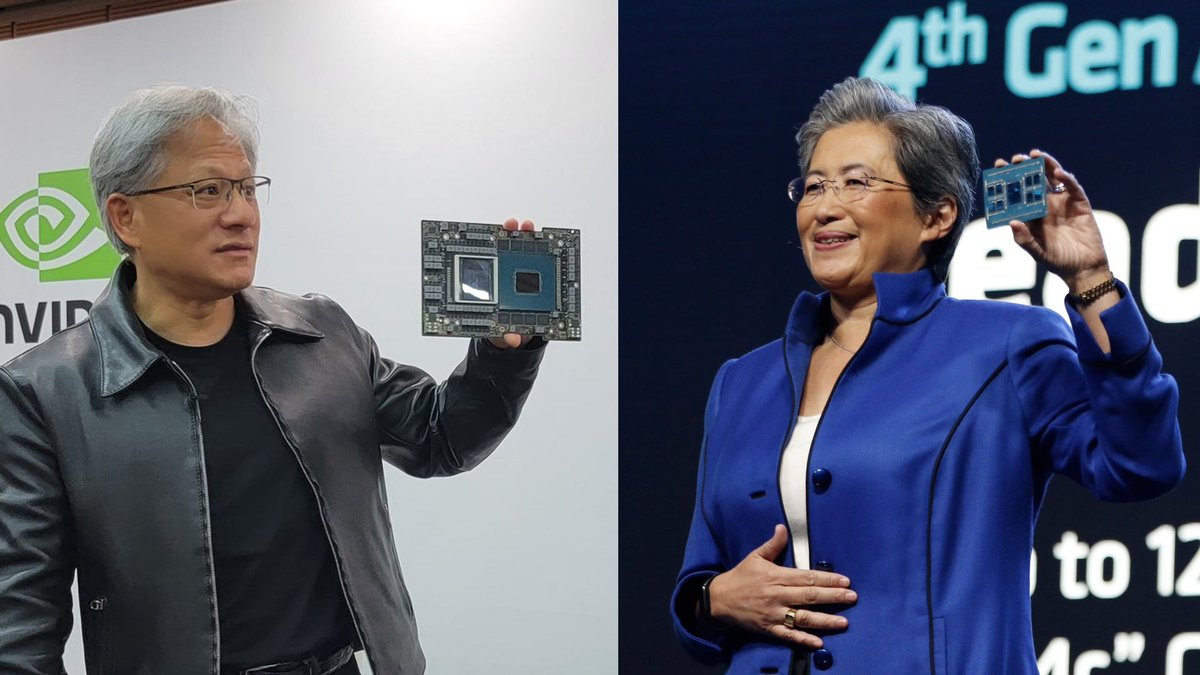

$AMD $NVDA 2025-2026 #AI Chip⚔️

With the China market reopening, $NVDA and $AMD should see higher growth than their Q1 ER guidance.

I'm revising my projection for $AMD 2026 revenue to $55B-$60B, up from $45B-$50B, due to rising demand for the current MI300 series and orders for the MI350 series.

Other drivers are the $500B Stargate project and major buyers like @Meta, @OpenAI, @Microsoft, and @Oracle. The most likely outcome is that $TSM cannot supply enough #AI chips for $AMD to sell, which would leave upside for the 2027 backlog.

This is a conservative projection. It is difficult to say how $AMD will price the MI400 series compared to $NVDA chips. If $AMD were to charge even just 10-20% less than $NVDA, we could see $30B+ a quarter, provided $TSM manages to produce enough supply. Currently, analysts are saying $TSM is way behind on production for both $NVDA and $AMD, even with the recent $100B push for more fabs in Phoenix.
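To put the revised numbers side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above ($45B-$50B revised to $55B-$60B, and the $30B+ a quarter scenario). Splitting annual revenue evenly across four quarters is my simplifying assumption, not something the projection states, and the variable names are purely illustrative.

```python
# Back-of-the-envelope check of the revenue figures quoted in the post.
# All dollar amounts are in billions of USD.

def midpoint(low, high):
    """Midpoint of a projected range."""
    return (low + high) / 2

old_range = (45.0, 50.0)   # earlier 2026 revenue projection
new_range = (55.0, 60.0)   # revised 2026 revenue projection

old_mid = midpoint(*old_range)
new_mid = midpoint(*new_range)

# Size of the revision, measured at the midpoints of the two ranges.
revision_pct = (new_mid - old_mid) / old_mid * 100

# ASSUMPTION: revenue is spread evenly over four quarters.
quarterly_low = new_range[0] / 4
quarterly_high = new_range[1] / 4

# The "$30B+ a quarter" scenario, taken at its $30B floor.
scenario_quarter = 30.0
scenario_annualized = scenario_quarter * 4
multiple_vs_projection = scenario_quarter / quarterly_high

print(f"Midpoint revision: {revision_pct:.1f}%")
print(f"Implied 2026 quarterly average: ${quarterly_low:.2f}B-${quarterly_high:.2f}B")
print(f"$30B/quarter annualized: ${scenario_annualized:.0f}B")
print(f"Scenario vs. top of projected quarterly average: {multiple_vs_projection:.1f}x")
```

Under that even-split assumption, the $30B+ quarter is roughly double the quarterly average implied by the 2026 projection, which is why it reads as an upside scenario rather than part of the base case.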

Here are some comparisons:

Compute Performance

X. The flagship @AMD MI400X is projected to deliver XX petaflops (PFLOPs) at FP4 precision and XX PFLOPs at FP8 precision, doubling the compute capability of the MI350 series. AMD claims the MI400X will be 10x more powerful than the MI300X for AI inference on Mixture of Experts models, representing a significant leap in performance. It features up to X Accelerated Compute Dies (XCDs), compared to X in the MI300, enhancing compute density.

X. @nvidia Blackwell B200 offers approximately XXXXX teraflops (5 PFLOPs) at FP16 under sparse computing conditions. The upcoming Rubin R100, expected in late 2026, is projected to deliver XX PFLOPs at FP4, outpacing the MI400X by about XX% in raw compute performance.

$NVDA’s GPUs benefit from optimized software stacks like CUDA and TensorRT, which often achieve higher utilization (e.g., XX% of theoretical FLOPs) than AMD’s ROCm, which currently achieves less than XX% on the MI300 series.

Memory Capacity and Bandwidth

X. The $AMD MI400X will feature XXX GB of HBM4 memory with a bandwidth of XXXX TB/s, a significant upgrade from the MI350’s XXX GB HBM3E at X TB/s. This represents a XX% increase in memory capacity and over 2.4x the bandwidth of the MI350 series. The high memory capacity and bandwidth make the MI400X particularly appealing for memory-intensive AI workloads, such as large-scale AI training and inference.

X. $NVDA’s Blackwell B200 has XXX GB of HBM3E memory with X TB/s bandwidth. The GB200 Superchip, which pairs two Blackwell GPUs, offers XXX GB of memory, slightly less than the MI400X’s XXX GB. The Rubin R100 is expected to use HBM4 as well, but specific memory capacity and bandwidth details are not yet confirmed. However, AMD claims the MI400X will offer higher memory capacity and bandwidth than Rubin.

System-Level Performance:

X. The $AMD MI400 series will power AMD’s Helios rack-scale AI system, integrating up to XX MI400 GPUs with EPYC Venice CPUs (up to XXX cores) and Pensando Vulcano network cards. This system is designed for high-density AI clusters and is expected to outperform NVIDIA’s Blackwell Ultra-based NVL72 in memory bandwidth and capacity, though it may lag behind the Rubin-based NVL144 in compute performance.

X. $NVDA’s NVL72 and NVL144 systems leverage NVLink for low-latency, high-bandwidth interconnects, providing a significant advantage in multi-GPU scaling compared to AMD’s reliance on Ethernet for MI350-based systems. The MI400’s Helios system aims to close this gap with advanced interconnects, but details on its networking performance are limited.

Analysis: AMD’s Helios system is a step toward competing with NVIDIA’s rack-scale solutions, but NVIDIA’s NVLink ecosystem may still provide better scalability for large clusters. The MI400’s memory advantages could make Helios more attractive for specific workloads.

Software Ecosystem:

X. $AMD’s ROCm software stack is improving but lags behind NVIDIA’s CUDA in maturity and adoption. For the MI300X, software limitations result in lower FLOPs utilization (~30% vs. NVIDIA’s 40%). AMD is focusing on optimizing ROCm for large-scale workloads, which could benefit the MI400 series, but software maturity remains a challenge for broader adoption.

X. $NVDA’s CUDA and TensorRT provide a significant competitive advantage, enabling better performance optimization and broader support for AI frameworks. This makes NVIDIA GPUs more versatile for smaller enterprises and research workloads.

Analysis: NVIDIA’s software ecosystem remains a key differentiator, potentially limiting the MI400’s appeal for customers reliant on CUDA. However, hyperscale customers (e.g., Meta, Microsoft) with resources to optimize ROCm may find the MI400’s hardware compelling.
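To see how the utilization gap interacts with pricing, here is a rough sketch in Python. It takes the ~30% vs. 40% FLOPs utilization quoted above for the MI300X and the hypothetical 10-20% price discount mentioned earlier in the post. Everything else is my assumption for illustration: prices are normalized so the NVIDIA chip costs 1.0, both chips are given equal peak FLOPs by default, and the MI300X-era utilization figures are simply carried over unchanged. The function names are hypothetical, not from any vendor tool.

```python
# Illustrative only: relative cost per *delivered* FLOP under the
# utilization figures quoted in the post (~30% for AMD, ~40% for NVIDIA).

AMD_UTIL = 0.30    # share of theoretical FLOPs achieved (from the post, MI300X)
NVDA_UTIL = 0.40   # share of theoretical FLOPs achieved (from the post)

def effective_flops(peak, utilization):
    """Delivered throughput = theoretical peak x achieved utilization."""
    return peak * utilization

def relative_cost_per_flop(discount, peak_ratio=1.0):
    """AMD cost per delivered FLOP divided by NVIDIA's.

    discount:   AMD price discount vs. NVIDIA (0.10 means 10% cheaper).
    peak_ratio: AMD peak FLOPs / NVIDIA peak FLOPs.
                ASSUMPTION: defaults to 1.0 (equal peak compute).
    A result below 1.0 means AMD is cheaper per delivered FLOP.
    """
    amd_cost = (1.0 - discount) / effective_flops(peak_ratio, AMD_UTIL)
    nvda_cost = 1.0 / effective_flops(1.0, NVDA_UTIL)
    return amd_cost / nvda_cost

# Peak-compute advantage AMD would need to match NVIDIA's delivered FLOPs.
breakeven_peak_ratio = NVDA_UTIL / AMD_UTIL

# Discount at which cost per delivered FLOP is equal, at equal peak compute.
breakeven_discount = 1.0 - AMD_UTIL / NVDA_UTIL

for d in (0.10, 0.20):
    print(f"{d:.0%} discount -> relative cost per delivered FLOP: "
          f"{relative_cost_per_flop(d):.2f}")
print(f"Break-even peak ratio: {breakeven_peak_ratio:.2f}x")
print(f"Break-even discount at equal peak: {breakeven_discount:.0%}")
```

Under these assumptions, a 10-20% discount alone leaves AMD at about 1.20x and 1.07x NVIDIA's cost per delivered FLOP, so the pricing case leans on ROCm utilization improving or on the memory advantages discussed above. This is compute-only arithmetic and ignores memory-bound workloads entirely.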

Lastly, while we don't know how $AMD will price the MI400 series, rumors from mega-cap companies suggest it would be XX% cheaper than $NVDA chips.

Analysts expect $AMD to take market share from $NVDA in the coming months and years.
