[Post Link](https://x.com/rohanpaul_ai/status/1944772624565051466)

Rohan Paul @rohanpaul_ai on x 74K followers

Created: 2025-07-14 14:55:00 UTC

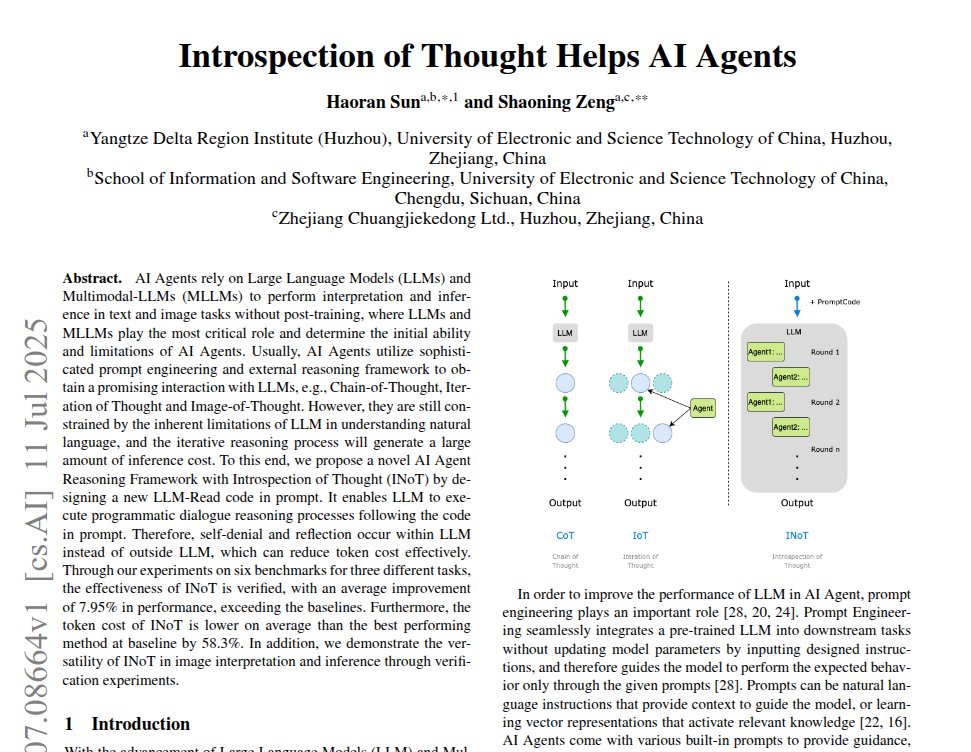

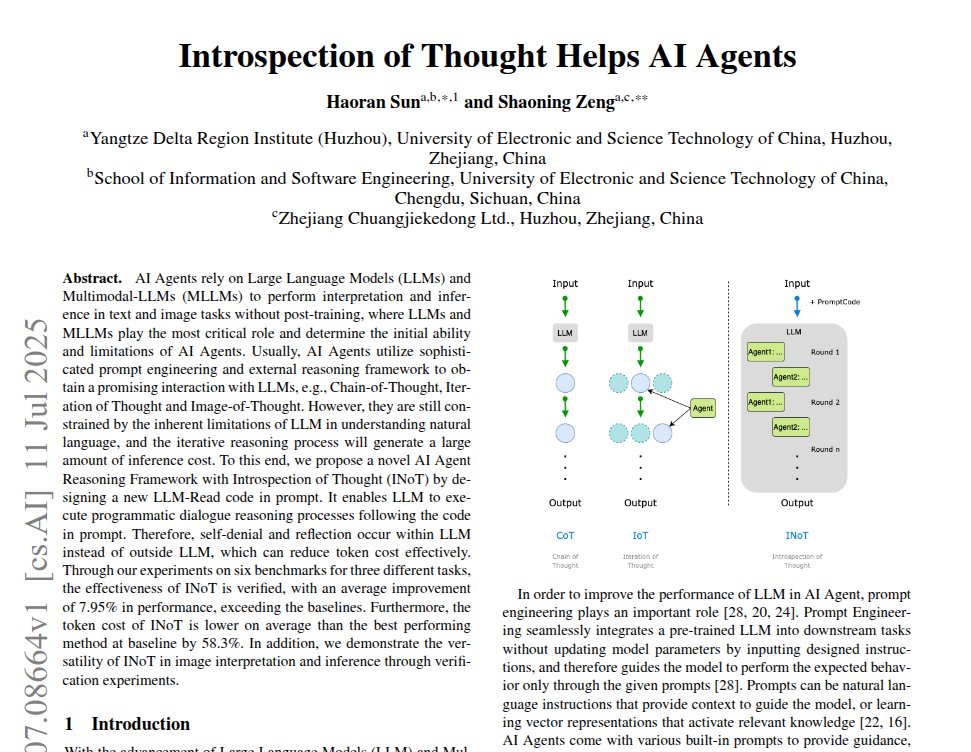

External prompt loops make LLM agents chat with themselves again and again, wasting tokens.

INoT, proposed in this paper, moves the whole debate inside the model with a readable mix of code and text.

Natural-language prompts get misread, and each extra round needs fresh input and output.

That cost piles up fast when tasks need reflection, critique, and fixes.
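
A rough way to see why the cost piles up, as a minimal sketch: it assumes every external round resends the whole transcript so far, and that the single-call variant pays once for a rules block. The function names and all token counts are made up for illustration and are not taken from the paper.

```python
def external_loop_tokens(prompt_tokens: int, reply_tokens: int, rounds: int) -> int:
    """Tokens billed when each round is a separate API call that resends the transcript."""
    total = 0
    context = prompt_tokens
    for _ in range(rounds):
        total += context + reply_tokens  # input plus output for this call
        context += reply_tokens          # the reply is appended to the next call's input
    return total


def single_call_tokens(prompt_tokens: int, rules_tokens: int,
                       reply_tokens: int, rounds: int) -> int:
    """Tokens billed when the same number of rounds happens inside one call."""
    # Assumption: the rules are paid for once, and each internal round
    # costs only its own output tokens.
    return prompt_tokens + rules_tokens + reply_tokens * rounds


if __name__ == "__main__":
    # Illustrative numbers only.
    print(external_loop_tokens(prompt_tokens=200, reply_tokens=150, rounds=4))
    print(single_call_tokens(prompt_tokens=200, rules_tokens=300,
                             reply_tokens=150, rounds=4))
```

Under these assumptions the external loop grows roughly quadratically with the number of rounds, while the single call grows linearly.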

INoT introduces PromptCode, a lightweight Python-like script sprinkled with plain words so the model parses steps exactly.

The prompt defines the language, then embeds a debate program in which two virtual agents reason, critique, rebut, and agree.
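
To make the "define the language, then embed a debate program" structure concrete, here is a hedged sketch of how such a prompt could be assembled. The rule text, the `agent_a` / `agent_b` names, and `build_debate_prompt` are hypothetical; this is not the paper's actual PromptCode wording.

```python
# Hypothetical wording: NOT the paper's actual PromptCode text.
LANGUAGE_RULES = (
    "You will read a program written in a Python-like script.\n"
    "Lines starting with '#' are plain-language hints.\n"
    "Run the program step by step internally and output only the final answer.\n"
)

# Hypothetical debate program; the model is meant to simulate it, not execute it.
DEBATE_TEMPLATE = """\
task = "{task}"
answer_a = agent_a.reason(task)      # first virtual agent proposes a solution
answer_b = agent_b.reason(task)      # second virtual agent proposes its own
for round in range({max_rounds}):
    if answer_a == answer_b:         # agreement ends the debate early
        break
    critique_a = agent_a.critique(answer_b)
    critique_b = agent_b.critique(answer_a)
    answer_a = agent_a.rebut(critique_b)
    answer_b = agent_b.rebut(critique_a)
final = agree(answer_a, answer_b)
"""


def build_debate_prompt(task: str, max_rounds: int = 3) -> str:
    """Return one prompt: language rules first, then the embedded debate program."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    program = DEBATE_TEMPLATE.format(task=task, max_rounds=max_rounds)
    return LANGUAGE_RULES + "\n" + program


if __name__ == "__main__":
    print(build_debate_prompt("What is 17 * 24?", max_rounds=2))
```

The whole string goes out in a single request, so the reason, critique, and rebut steps would happen inside one model call instead of across several.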

An optional Image Augment chunk teaches multimodal models to list objects, lighting, context, and uncertainty before reasoning about pictures.
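
A minimal sketch of what an optional image chunk might look like, assuming it is simply prepended to the prompt when an image is attached. The four steps come from the post; the wording, `image_augment_chunk`, and `with_image_augment` are hypothetical.

```python
# The four items are the ones named in the post; the phrasing is an assumption.
IMAGE_AUGMENT_STEPS = ("objects", "lighting", "context", "uncertainty")


def image_augment_chunk(steps=IMAGE_AUGMENT_STEPS) -> str:
    """Build a numbered instruction block asking the model to describe the image first."""
    lines = ["Before reasoning about the image, describe it in this order:"]
    for number, step in enumerate(steps, start=1):
        lines.append(f"{number}. {step}")
    return "\n".join(lines)


def with_image_augment(prompt: str, has_image: bool) -> str:
    """Prepend the image chunk only when the request actually carries an image."""
    if not has_image:
        return prompt
    return f"{image_augment_chunk()}\n\n{prompt}"


if __name__ == "__main__":
    print(with_image_augment("How many people are in the picture?", has_image=True))
```

Keeping the chunk optional means text-only tasks do not pay for instructions they never use.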

Across X benchmarks spanning math, code, and QA, accuracy rises by XXXX% on average while tokens drop 58.3%.

It even boosts image QA by up to X% and stays steady across X different base models.

So performance climbs and wallets breathe easier.

Key idea: teach the model the rules once, let it police its own thoughts, and avoid repeated API calls.

Paper – arxiv.org/abs/2507.08664

Paper Title: "Introspection of Thought Helps AI Agents"
