
Lukas Ziegler @lukas_m_ziegler on X, 10.4K followers

Created: 2025-07-03 14:49:28 UTC


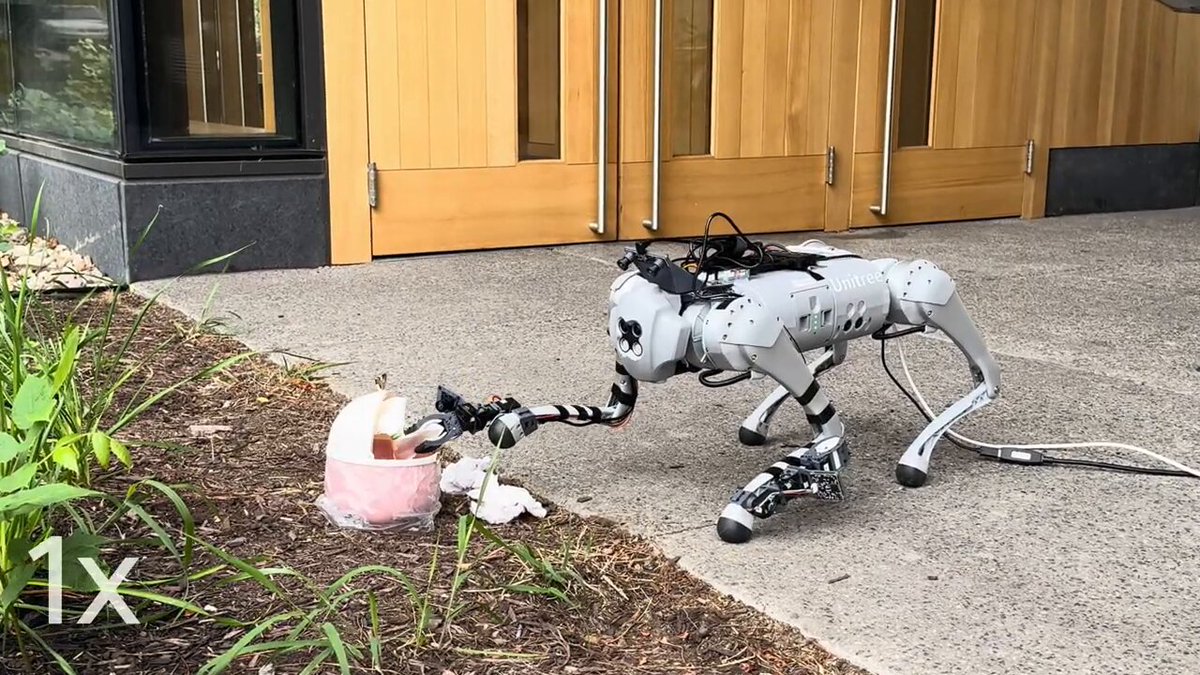

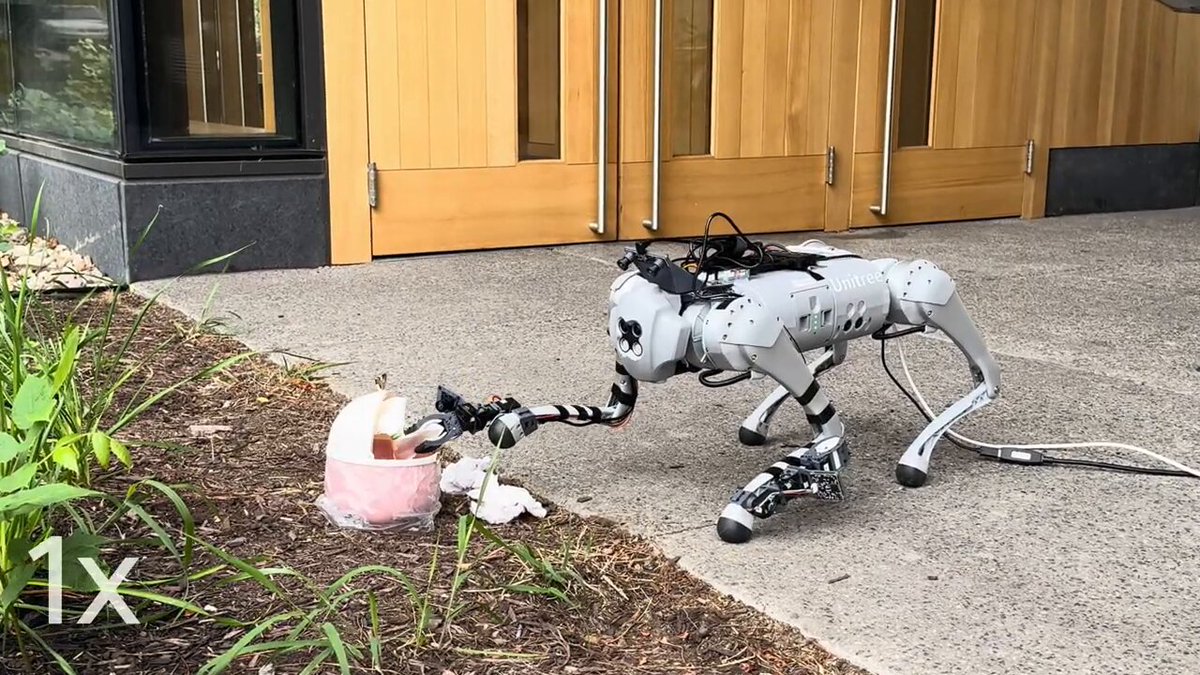

Quadrupeds are fast. Agile. Great at locomotion.

But can they manipulate?

A new approach from Carnegie Mellon University, Google DeepMind, and Bosch is teaching quadrupedal robots to do more than walk: they’re learning to interact.

It’s called Human2LocoMan: a system that uses human data to pretrain robot policies before fine-tuning them on real hardware.

The result? A four-legged robot that can walk, carry, organize, scoop, and sort, using both single- and dual-arm control.

By pretraining on human motion data, they cut the amount of robot data needed in half, while improving success rates by over XX% in unfamiliar environments.

Their Modularized Cross-Embodiment Transformer (MXT) learns from both human and robot demonstrations, then generalizes those skills to physical tasks, with no hardcoded behaviors required.

It’s locomotion and manipulation.

A quadruped that can walk and clean up after itself?
