[Post Link](https://x.com/rohanpaul_ai/status/1939533142445953078)

Rohan Paul @rohanpaul_ai on x 74.1K followers

Created: 2025-06-30 03:55:10 UTC

🚨 CHINA’S BIGGEST PUBLIC AI DROP SINCE DEEPSEEK

@Baidu_Inc open-sources Ernie, XX multimodal MoE variants

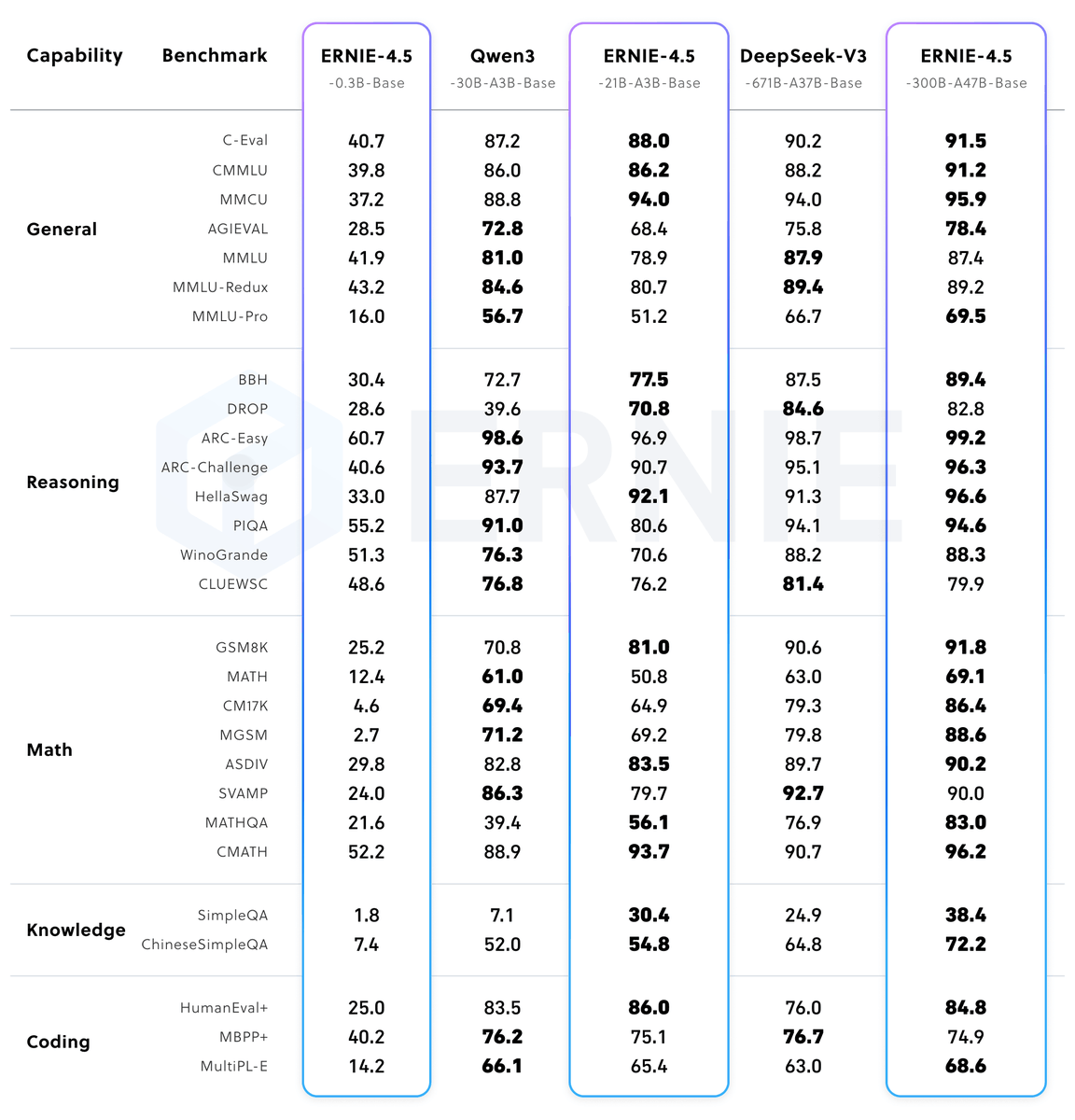

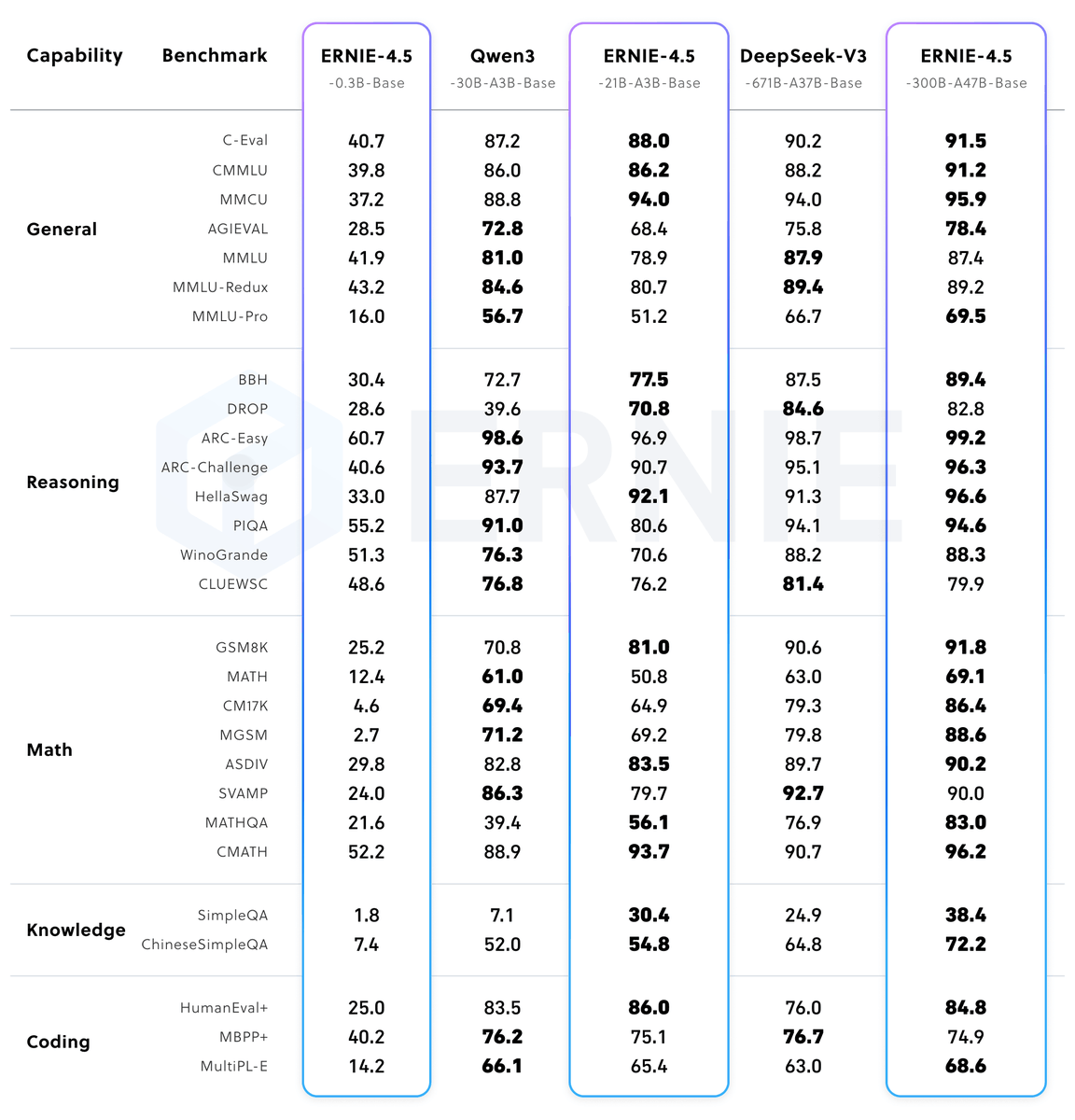

🔥 Surpasses DeepSeek-V3-671B-A37B-Base on XX out of XX benchmarks

🔓 All weights and code released under the commercially friendly Apache XXX license (available on @huggingface)

Thinking and non-thinking modes available

📊 The 21B-A3B model beats Qwen3-30B on math and reasoning despite using XX% fewer parameters

🧩 XX released variants range from 0.3B dense to 424B total parameters. Only 47B or 3B parameters stay active per token, thanks to mixture-of-experts routing
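
To make the "active vs. total" point concrete, here is a minimal sketch of the arithmetic. It assumes the 47B active figure belongs to the 424B model and the 3B active figure to the 21B-A3B model; the post does not spell out that pairing, and the function name is made up for illustration.

```python
def active_fraction(active_b: float, total_b: float) -> float:
    """Share of parameters used per token in a mixture-of-experts model."""
    if total_b <= 0 or not 0 <= active_b <= total_b:
        raise ValueError("expected 0 <= active <= total and total > 0")
    return active_b / total_b


# Sizes in billions of parameters, taken from the post.
# The active/total pairing below is an assumption, not stated in the post.
large = active_fraction(47, 424)
small = active_fraction(3, 21)

print(f"424B total, 47B active: {large:.1%} of weights used per token")  # 11.1%
print(f"21B total, 3B active: {small:.1%} of weights used per token")    # 14.3%
```

Under that pairing, each token touches only a small slice of the weights, which is where the compute savings of MoE routing come from.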

🔀 A heterogeneous MoE layout sends text and image tokens to separate expert pools while shared experts learn cross-modal links, so the two media strengthen rather than hinder each other
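
A toy sketch of what modality-separated routing with shared experts could look like. This is not Baidu's implementation: the experts here are simple scaling functions, the gate vectors are hand-picked, and every name (`Token`, `POOLS`, `GATES`, `SHARED`, `moe_layer`) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Token:
    modality: str            # "text" or "image"
    features: list[float]


def scale(factor: float):
    """Toy expert that multiplies every feature by a fixed factor."""
    return lambda x: [factor * v for v in x]


# Hypothetical expert pools; real experts would be feed-forward networks.
POOLS = {
    "text": [scale(1.0), scale(2.0)],
    "image": [scale(-1.0), scale(0.25)],
}
# A shared expert sees tokens from both modalities.
SHARED = scale(0.5)

# Hypothetical gate vectors, one per expert in each pool.
GATES = {
    "text": [[1.0, 0.0], [0.0, 1.0]],
    "image": [[1.0, 0.0], [0.0, 1.0]],
}


def route(token: Token) -> int:
    """Pick the top-1 expert inside the token's own modality pool."""
    scores = [
        sum(g * v for g, v in zip(gate, token.features))
        for gate in GATES[token.modality]
    ]
    return max(range(len(scores)), key=scores.__getitem__)


def moe_layer(token: Token) -> list[float]:
    """Routed (modality-specific) expert output plus shared expert output."""
    routed = POOLS[token.modality][route(token)](token.features)
    shared = SHARED(token.features)
    return [r + s for r, s in zip(routed, shared)]


print(moe_layer(Token("text", [1.0, 3.0])))   # [2.5, 7.5]
print(moe_layer(Token("image", [4.0, 2.0])))  # [-2.0, -1.0]
```

A text token never competes for an image expert and vice versa, while the shared expert is the one place both modalities update the same weights.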

⚡ Intra-node expert parallelism, FP8 precision and fine-grained recomputation lift pre-training to XX% model FLOPs utilization on the biggest run, showing strong hardware efficiency
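
For readers unfamiliar with the metric, a rough sketch of how model FLOPs utilization (MFU) is commonly estimated. It uses the widespread approximation of about 6 FLOPs per active parameter per training token, which ignores attention cost. All input numbers are made up for illustration and are not Baidu's figures.

```python
def model_flops_utilization(
    active_params: float,
    tokens_per_second: float,
    num_accelerators: int,
    peak_flops_per_accelerator: float,
) -> float:
    """Achieved training FLOP/s divided by the hardware's peak FLOP/s.

    Uses the ~6 * N FLOPs-per-token rule of thumb (forward + backward),
    with N the parameters active per token.
    """
    achieved = 6.0 * active_params * tokens_per_second
    peak = num_accelerators * peak_flops_per_accelerator
    return achieved / peak


# Hypothetical run: 1B active params, 100k tokens/s, 8 chips at 100 TFLOP/s.
mfu = model_flops_utilization(1e9, 1e5, 8, 1e14)
print(f"MFU: {mfu:.0%}")  # MFU: 75%
```

Techniques like FP8 and expert parallelism raise the numerator (more tokens per second on the same chips), which is why they show up as MFU gains.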

🖼️ Vision-language versions add thinking and non-thinking modes, topping MathVista, MMMU and document-chart tasks while keeping strong perception skills

🛠️ ERNIEKit offers LoRA, DPO, UPO and quantization for fine-tuning, and FastDeploy serves low-bit multi-machine inference with a single command

⚖️ Multi-expert parallel collaboration plus 4-bit and 2-bit lossless quantization cut inference cost without hurting accuracy
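
As a point of reference for the low-bit claim, here is a minimal sketch of plain symmetric round-to-nearest 4-bit quantization. This is a generic baseline, not the scheme the post describes, and unlike the "lossless" claim it visibly loses some precision; the function names are hypothetical.

```python
def quantize_4bit(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric round-to-nearest quantization to integer codes in [-7, 7]."""
    max_abs = max(abs(w) for w in weights)
    step = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-7, min(7, round(w / step))) for w in weights]
    return codes, step


def dequantize(codes: list[int], step: float) -> list[float]:
    """Map integer codes back to approximate floating-point weights."""
    return [c * step for c in codes]


weights = [3.5, -1.5, 0.6, 0.0]
codes, step = quantize_4bit(weights)
restored = dequantize(codes, step)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes)      # [7, -3, 1, 0]
print(restored)   # [3.5, -1.5, 0.5, 0.0]
print(max_error <= step / 2)  # True
```

Each weight is stored as a 4-bit code plus one shared step size per group, so memory drops sharply; keeping accuracy intact at 4-bit or 2-bit requires more careful methods than this baseline.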

XXXXXX engagements
