[Post Link](https://x.com/akshay_pachaar/status/1928074728063021378)

Akshay 🚀 @akshay_pachaar on x 215.3K followers

Created: 2025-05-29 13:03:31 UTC

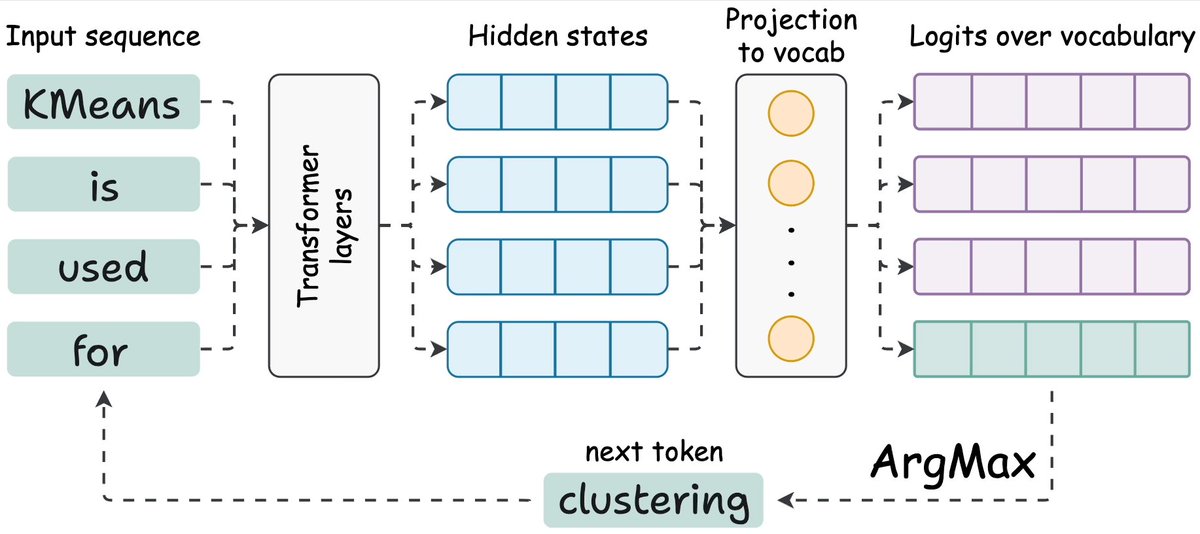

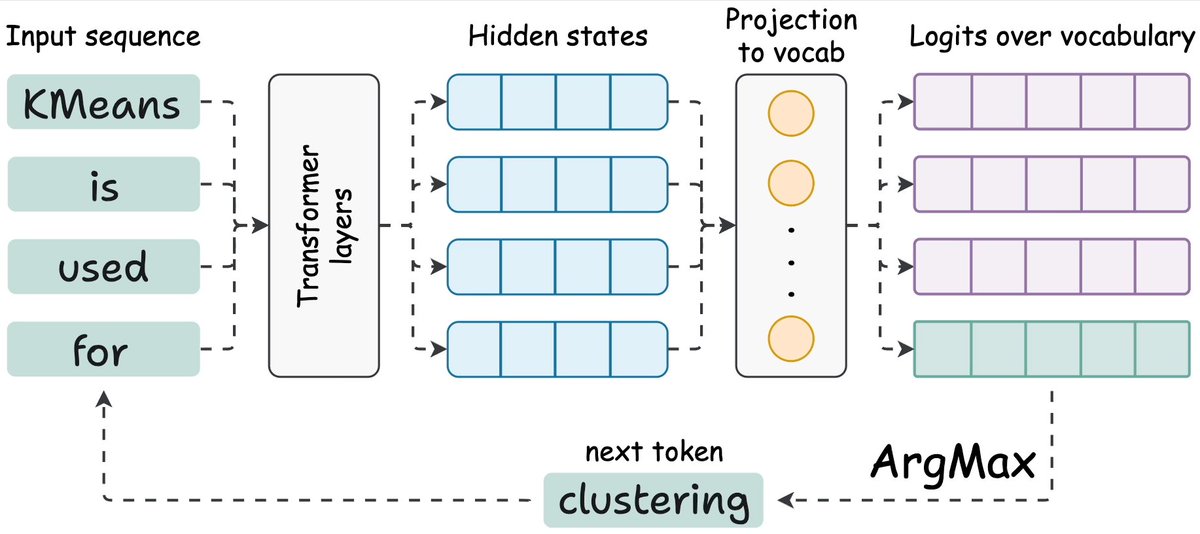

To understand KV caching, we must know how LLMs output tokens.

- The transformer produces a hidden state for every token in the sequence.

- The hidden states are projected into vocabulary space, giving logits.

- The logits of the last token are used to generate the next token.

- The new token is appended and the process repeats for subsequent tokens.
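
The steps above can be sketched as a small decoding loop. This is only an illustration under stated assumptions, not any real model's implementation: the weights are random, the "transformer" is a single causal self-attention layer, decoding is greedy (argmax), and every name (`hidden_states`, `generate`, `W_embed`, `W_unembed`, `VOCAB`, `D_MODEL`) is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB, D_MODEL = 50, 16  # toy sizes, chosen arbitrarily

# Hypothetical random weights; a real LLM would load trained parameters.
W_embed = rng.normal(size=(VOCAB, D_MODEL))
W_q = rng.normal(size=(D_MODEL, D_MODEL))
W_k = rng.normal(size=(D_MODEL, D_MODEL))
W_v = rng.normal(size=(D_MODEL, D_MODEL))
W_unembed = rng.normal(size=(D_MODEL, VOCAB))


def hidden_states(token_ids):
    """Toy stand-in for a transformer: one causal self-attention layer.

    Returns one hidden state per input token, shape (T, D_MODEL).
    """
    x = W_embed[token_ids]                       # (T, D_MODEL)
    q, k, v = x @ W_q, x @ W_k, x @ W_v
    scores = q @ k.T / np.sqrt(D_MODEL)          # (T, T)
    # Causal mask: token i may only attend to tokens 0..i.
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return x + weights @ v                       # residual connection


def generate(prompt_ids, n_new):
    """Greedy decoding without any caching."""
    ids = list(prompt_ids)
    for _ in range(n_new):
        h = hidden_states(np.array(ids))      # hidden states for all tokens
        logits = h @ W_unembed                # project to vocab space: (T, VOCAB)
        next_id = int(np.argmax(logits[-1]))  # only the last token's logits are used
        ids.append(next_id)                   # repeat with the longer sequence
    return ids


tokens = generate([1, 2, 3], n_new=4)
```

Note that every iteration recomputes the keys and values for the whole sequence even though only the last row of `logits` is used. Because of the causal mask, the hidden states of earlier tokens do not change when a new token is appended, and that redundancy is what KV caching removes.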

Check this👇
