David Shapiro ⏩ @DaveShapi on x 44.2K followers

Created: 2025-07-19 16:35:28 UTC

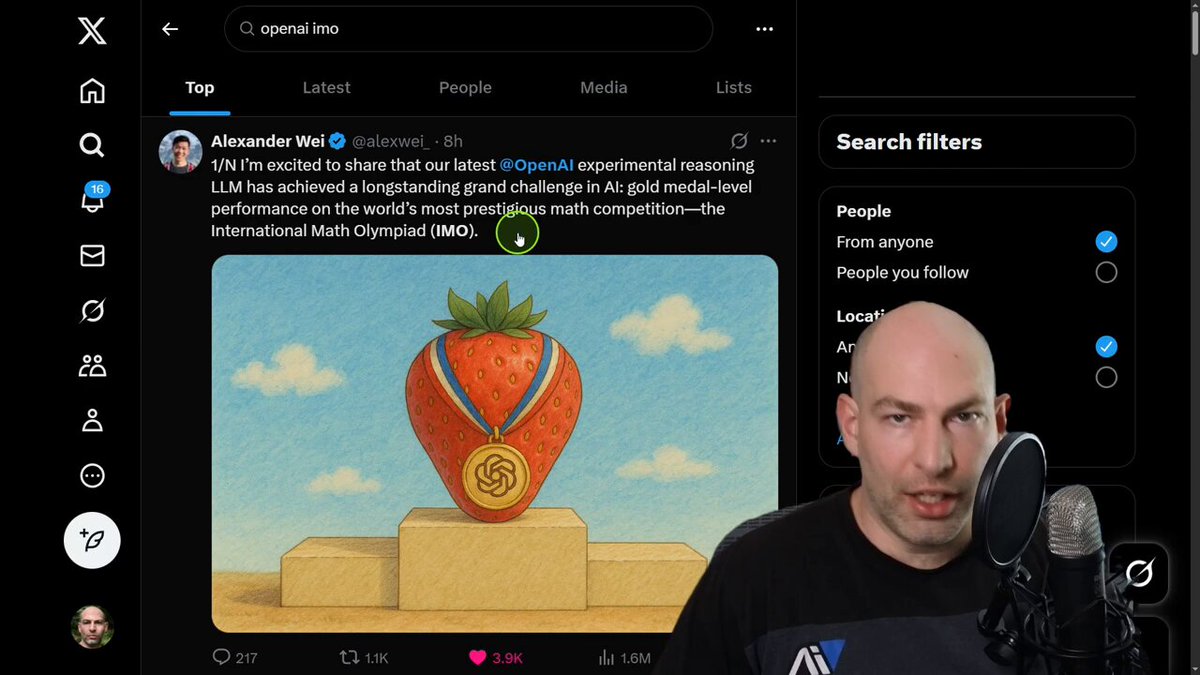

OpenAI Just Hit Gold-Medal Level at the 2025 International Math Olympiad: BIGGEST AI NEWS ALL YEAR!

Hello everybody! It's been a hot minute since my last update, so sorry for the radio silence. But today's news is so mind-blowing that I had to jump back in and unpack it for you all. Buckle up: OpenAI just dropped a bombshell with an internal experimental model that's a general-purpose reasoning beast. This thing wasn't even built for math, but it still scored at GOLD MEDAL level on the International Math Olympiad, the world's most prestigious math competition!

For context: a few months ago, their models weren't anywhere near the top ranks (like, bottom of the pile). Now? Gold. And they weren't even trying to crack math; they were tinkering with "test-time compute" breakthroughs, aka inference-time scaling (think the Strawberry/Q* line of work that led to o1 and o3). The result? A general reasoner that's better at math than most humans. Wild.

Flashback to April: when OpenAI dropped the o4-mini benchmarks, I hyped it up by saying they'd "solved math" on AIME 2024 (an advanced high school competition). Yeah, I was being hyperbolic for fun, and I got community-noted by Noam Brown himself ("We didn't solve math"). But my point stands: they had completely saturated that benchmark. Directionally, once you see those big jumps (from XX% to near-perfect), you know saturation is coming. ML history has shown this pattern for decades: big leaps mean you've cracked the domain.

Fast-forward: in just 2-3 months, they've nearly saturated the IMO. This isn't AIME-style high school math; IMO problems are elite olympiad material. It proves my take: tipping points lead to mastery faster than expected.

Reactions? Prediction markets (Manifold) had AI gold at IMO 2025 at ~20% odds yesterday. Now? 85-86%. A total blindside: no leaks, pure surprise. Even OpenAI folks like Noam Brown and Alexander Wei seemed shocked. They were just experimenting with algorithms, and boom, math obliterated.

Hilarious side note: Gary Marcus (the Jim Cramer of AI) tweeted yesterday that AI wasn't even close to silver on the IMO. The next day? Gold. Owned.

So why care? This isn't niche. Math underpins ALL of STEM (Science, Technology, Engineering, Math). Master math, and you can master quantum physics, high-energy physics, even invent new theories (Sam Altman's "discover new physics" dream). Experiments like LIGO or the LHC? They produce enormous volumes of data, all crunched with math. AI itself? Linear algebra and matrices: all math. Better math AI means self-improving AI, just as AI has already revolutionized coding.

Downstream, this raises the floor for everyone. AI already lets you learn to code like a five-year veteran in weeks; now the same goes for math, with a PhD-level tutor in your pocket. It broadens access: more people diving into STEM, cybersecurity, everything. Then network effects kick in, and AI proliferates like electricity (a classic general-purpose technology). It took decades for electricity to go from "what's this for?" to everywhere. Same here: we're still finding uses for transformers.

General-purpose technology traits: 1) continual internal improvement (this leap is one example); 2) pervasiveness (it infiltrates every industry: education, healthcare, the military, and that's already happening); 3) compounding returns (virtuous cycles where AI helps both itself and humans).

This leap screams acceleration toward post-labor economics. If you've followed my Substack, you know I'm refining a framework: the rise of automation, the decline of labor, shifting power and social contracts, new KPIs, interventions, and life after labor. I just dropped Part 1, "The Rise of Automation." It's the blueprint for my book with Julia McCoy, The Great Decoupling.

Head to Substack for the full dive. Comments and questions are welcome, since they help refine the message. When a general-purpose technology saturates benchmarks like this, momentum builds fast. AI everywhere, incoming.

Thanks for reading, cheers! Have a good one. 🚀🤖 #AI #OpenAI #MathOlympiad #PostLaborEconomics